Super Resolution (SR) is a catch-all term for techniques applied to still images or video to enhance resolution, with the goal of getting as close as possible to the quality of footage shot natively on HD devices. The technology has been part of the broadcast landscape for a few years.

It was first deployed in high-end TVs, mostly in the form of dedicated (and very powerful) processors designed to perform AI-powered super resolution on non-4K content right at the point of delivery. The aim was to replace the classical interpolation methods traditionally used for upconversion.

But is it time for broadcasters to start thinking about migrating super resolution to the headend to guarantee the same quality across devices? We think it is. Here’s why.

Credit: Satish Vishwanath

Lights, camera, shutdowns.

2020 was a challenging year for the broadcast industry, to put it mildly.

The pandemic shut down essential parts of the content production pipeline, including sporting events and film sets. At the same time, more and more people were tuning in to watch something (anything!) in the midst of months-long periods of isolation and quarantine. With almost nothing new to air, broadcasters realized that they could leverage years’ worth of archival content to keep audiences entertained.

They just had to make sure the quality was up to scratch first.

Super resolution to the rescue

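SR is a challenging computer vision problem because it is underdetermined (ill-posed): a low-resolution image does not contain enough information to pin down a single high-resolution original, so, for all intents and purposes, there are infinitely many plausible ways to fill in the missing detail.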
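
To make the ambiguity concrete, here is a minimal sketch in plain Python. It assumes a toy downsampler that averages neighbouring pairs of samples (our own illustrative choice, not any particular product's method) and shows three different high-resolution rows collapsing to the same low-resolution row.

```python
def downsample_by_2(signal):
    """Average each pair of neighbouring samples (a simple box-filter downsample)."""
    return [(signal[i] + signal[i + 1]) / 2 for i in range(0, len(signal) - 1, 2)]


# Three different candidate high-resolution rows (illustrative values only).
hr_a = [10, 30, 50, 70]
hr_b = [20, 20, 60, 60]
hr_c = [0, 40, 45, 75]

low_a = downsample_by_2(hr_a)
low_b = downsample_by_2(hr_b)
low_c = downsample_by_2(hr_c)

# All three produce the same low-resolution row, so the low-resolution data
# alone cannot tell us which high-resolution row was the original.
print(low_a, low_b, low_c)
```

An SR method therefore has to bring in extra knowledge about what real images look like in order to choose among the candidates.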

Hundreds of such techniques have been proposed over the years, mostly by the scientific community and academia. However, most run too slowly to be practical in the broadcast industry. Only a few offer a balance between accuracy and complexity that makes them a good fit for broadcasters.

A good super resolution technique for real-world input is one that has been designed to fix degradations. While certain kinds of degradations lead to an irretrievable loss of information, others, such as motion compression, warping, blurring, down-sampling and noise, can be addressed algorithmically.
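
As a rough illustration of what such degradations do to a signal, the sketch below chains three of them (blurring, down-sampling and noise) on a single row of pixel values. The kernel, factor and noise level are assumptions picked for the example, and the function names are hypothetical; this is a toy model, not a description of how any specific filter is trained or built.

```python
import random


def blur(row):
    """Apply a 3-tap [0.25, 0.5, 0.25] blur, repeating the edge samples."""
    padded = [row[0]] + list(row) + [row[-1]]
    return [
        0.25 * padded[i] + 0.5 * padded[i + 1] + 0.25 * padded[i + 2]
        for i in range(len(row))
    ]


def downsample(row, factor=2):
    """Keep every `factor`-th sample."""
    return row[::factor]


def add_noise(row, sigma, rng):
    """Add zero-mean Gaussian noise with standard deviation `sigma`."""
    return [value + rng.gauss(0.0, sigma) for value in row]


def degrade(row, factor=2, sigma=1.0, seed=0):
    """Toy degradation chain: blur, then down-sample, then add noise."""
    rng = random.Random(seed)  # seeded so the example is repeatable
    return add_noise(downsample(blur(row), factor), sigma, rng)


clean = [0, 0, 0, 100, 100, 100, 0, 0]  # a sharp-edged bright bar
observed = degrade(clean)
print(observed)
```

Restoring `clean` from `observed` means undoing each stage approximately, which is why the sharp edges of the bar are the hardest part to recover.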

Real-world applications

There are several reasons super resolution is a really good idea:

- Archival footage is often degraded because, generally speaking, the older the footage, the worse the quality.

- Mobile tablets are extremely popular for watching streaming content and these do not (yet) benefit from the AI enhancement offered by some TVs.

- There are no standards for AI enhancement in consumer TVs today, which means the picture quality the end consumer actually sees is essentially unpredictable. By increasing the quality at the headend, everyone benefits.

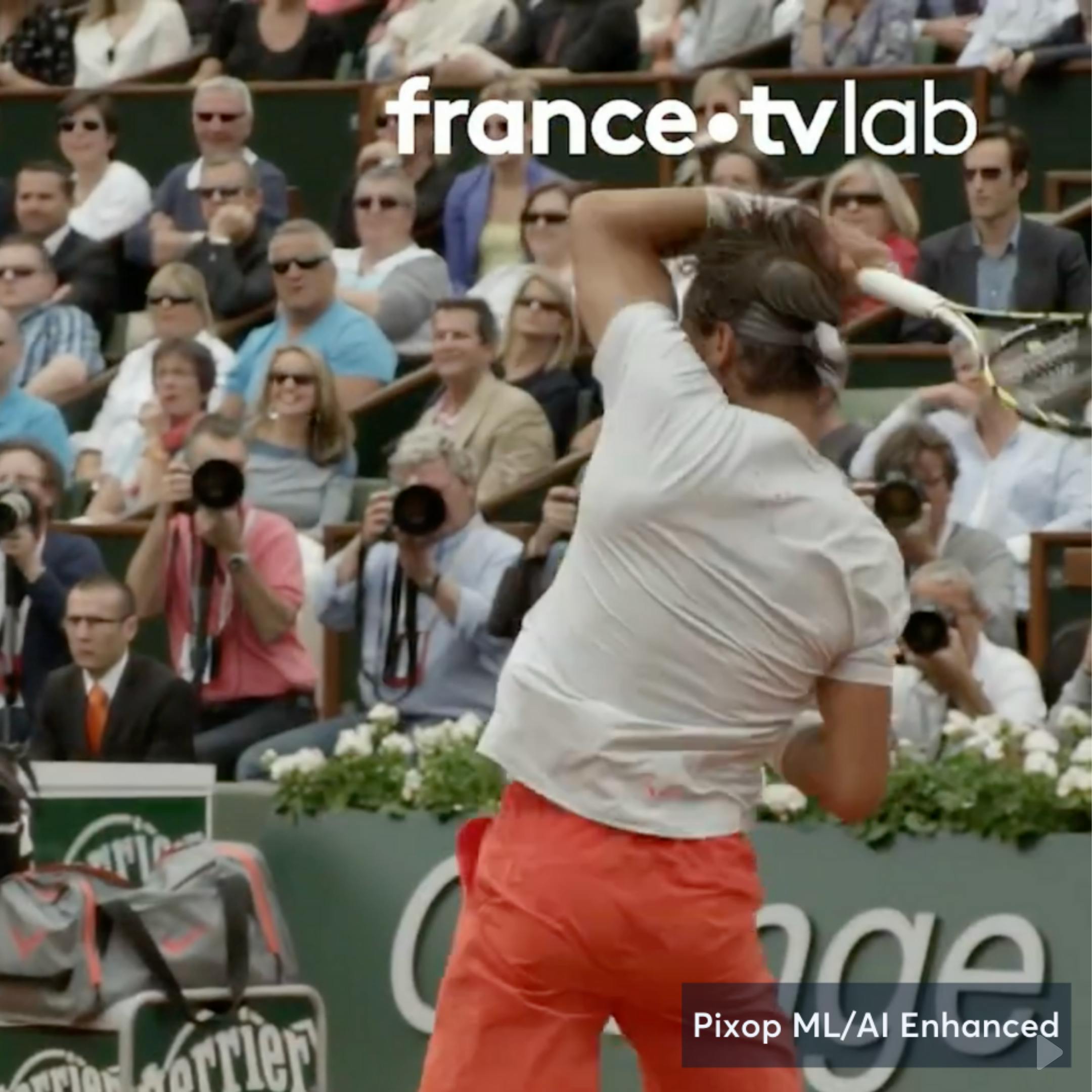

Pixop's Deep Restoration and Super Resolution filters were applied.

One experiment putting super resolution to the test was carried out by Pixop, France tv Lab and Harmonic Inc in September 2020.

Harmonic used the Pixop Deep Restoration and Pixop Super Resolution filters to upscale tennis clips to see how SR techniques behaved in an SD to HD to UHD 4K upscaling scenario. Both filters are powered by state-of-the-art machine learning. The experiment was also conducted to evaluate how Pixop's filters fared against the best-in-class filters (typically Lanczos filters) commonly used today.

Pixop Deep Restoration and Super Resolution were combined into a hybrid workflow, taking advantage of the best aspects of each.

Deep restoration was first applied to the SD content to restore texture and detail and to upscale it to full HD. The Super Resolution filter was then applied to generate UHD 4K content with higher sharpness and edge preservation.

In the case of a full HD feed, the super resolution algorithm could be applied without the preliminary deep restoration step. With some older clips that were also tested, an additional step using Pixop’s Denoiser was applied to denoise the footage before both deep restoration and super resolution.
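
The ordering of the steps described above can be sketched as a small planning function. Everything here is hypothetical: the function name, the step labels and the height thresholds are assumptions made for illustration and do not correspond to Pixop's actual API or decision logic.

```python
FULL_HD_HEIGHT = 1080  # assumed threshold for "full HD"
UHD_4K_HEIGHT = 2160   # assumed threshold for "UHD 4K"


def plan_upscale(input_height, noisy=False):
    """Return the ordered list of filter steps for a clip (illustrative only)."""
    steps = []
    if noisy:
        # Older, noisy clips are denoised before anything else.
        steps.append("denoise")
    if input_height < FULL_HD_HEIGHT:
        # SD content is first restored and upscaled to full HD.
        steps.append("deep_restoration")
    if input_height < UHD_4K_HEIGHT:
        # Full HD (native or restored) is then upscaled to UHD 4K.
        steps.append("super_resolution")
    return steps


print(plan_upscale(576))              # SD clip
print(plan_upscale(1080))             # full HD feed
print(plan_upscale(576, noisy=True))  # older, noisy SD clip
```

Keeping the plan as plain data makes the order explicit: denoising always comes first, and super resolution always runs on full HD input.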

The upscaled results were then compared to commonly used interpolation filters, as well as the upscaling of an SD or HD feed in the 4K TV itself.
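
For readers unfamiliar with the classical baseline, here is a minimal one-dimensional sketch of Lanczos interpolation, using the standard windowed-sinc kernel. The window size `a=3`, the edge clamping and the weight normalisation are our assumptions for the example; production scalers work in two dimensions and differ in such details.

```python
import math


def lanczos_kernel(x, a=3):
    """Lanczos window: sinc(x) * sinc(x / a) for |x| < a, zero elsewhere."""
    if x == 0:
        return 1.0
    if abs(x) >= a:
        return 0.0
    px = math.pi * x
    return a * math.sin(px) * math.sin(px / a) / (px * px)


def lanczos_upscale_row(row, factor=2, a=3):
    """Upscale one row of samples by an integer factor."""
    n = len(row)
    out = []
    for j in range(n * factor):
        # Position of output sample j in input coordinates (centres aligned).
        x = (j + 0.5) / factor - 0.5
        left = math.floor(x) - a + 1
        acc = 0.0
        weight_sum = 0.0
        for i in range(left, left + 2 * a):
            w = lanczos_kernel(x - i, a)
            sample = row[min(max(i, 0), n - 1)]  # clamp indices at the edges
            acc += w * sample
            weight_sum += w
        out.append(acc / weight_sum)  # normalise so flat areas stay flat
    return out


edge = [0, 0, 0, 100, 100, 100]
print([round(v, 1) for v in lanczos_upscale_row(edge)])
```

Because each output sample is only a weighted average of nearby input samples, a filter like this cannot add detail that was never in the input, which is the gap learned SR methods aim to close.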

The big picture

When the results were evaluated, we found that Pixop’s Deep Restoration and Super Resolution filters fared better than either bilinear upscaling or Lanczos filters, with the SR content looking like ‘true’ native 4K.

The SR upscaling was better in terms of sharpness, contrast and textural detail, while avoiding common problems such as aliasing or temporal instability. The upscaling provided by 4K televisions was found to be lacking because it simply cannot offer a ‘true’ 4K experience similar to what SR can achieve. Furthermore, any compression artifacts caused by bandwidth constraints are amplified by this upscaling process, leading to a more disappointing 4K experience.

The experiment leads us to conclude that embedding deep restoration and super resolution algorithms in the headend is the way to go, as long as quality 4K content can be delivered at a reasonable bandwidth to end-users.

The computing requirements of the Pixop ML-based super resolution filter can be met by powerful CPU servers already deployed in the cloud, which currently achieve real-time processing at 35fps for HD and 12fps for 4K. Furthermore, each year brings more technologies and codecs that allow 4K resolution to be delivered at a reasonable bandwidth. These are the reasons we strongly believe that moving the SR process to the headend (instead of end-user devices) is going to be the future of upscaling and upconversion in the broadcast industry.
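
As a back-of-the-envelope illustration of what those frame rates imply for capacity planning, the sketch below estimates how many processing instances would be needed to keep up with a given output frame rate. The target rates of 25 and 50fps, and the idea that work splits perfectly across parallel instances, are assumptions for the sake of the arithmetic and not measured results.

```python
import math

# Per-instance throughput figures quoted in the text above.
REPORTED_FPS = {"HD": 35, "4K": 12}

# Assumed target output frame rates (illustrative only).
TARGET_FPS = (25, 50)


def instances_needed(target_fps, per_instance_fps):
    """Instances required to sustain target_fps, assuming perfect scaling."""
    return math.ceil(target_fps / per_instance_fps)


for label, fps in REPORTED_FPS.items():
    for target in TARGET_FPS:
        count = instances_needed(target, fps)
        print(f"{label} at {target}fps: {count} instance(s)")
```

Real deployments would also need headroom for encoding, I/O and uneven scaling, so these counts are a lower bound at best.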